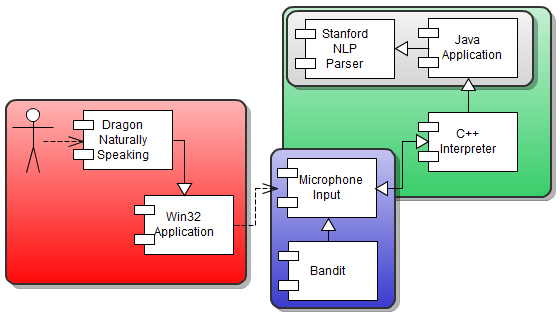

At last, I was able to resolve my longstanding issue with the microphone. No matter which settings I used, I wasn't able to get any microphone input into Dragon NaturallySpeaking. However, because I was getting no input on the Windows VM or Ubuntu, I guessed that it was a problem with the microphone settings rather than the virtual machine. It turned out, however, that not only was there a switch on the microphone to begin transmitting that I hadn't seen before, but I also had to specify which sound card should be used for microphone input via alsamixer on Ubuntu.

With this problem resolved, it didn't take Juan and I more than ten minutes to get Dragon up and running with Bandit and the Win32 application that I already wrote to interface with Dragon. Using the microphone, we were able to get my Repetition Game demo working this time with the microphone itself, rather than text input! This resolved the majority of the problems that I had been having over the past two weeks and made the demo much more attractive, despite a few lingering bugs. As a result I was able to present it as a part of the Interaction Lab demo session on the last day of RSS. The demo went well, and Juan and I were able to identify several areas for future work

Now that the microphone works, the demo is functional, and RSS is over, we want to work towards integrating the Stanford NLP Parser in order to extract contextual information from input received from the microphone. With this information, we will be able to teach Bandit to respond more intelligently to commands and other input received via the microphone, creating a more natural interaction between human and robot for future work. However, to do so, I will have to install the Stanford NLP Parser and write a Java application to interface between the parser and the existing Bandit code. This is the next phase of my project going into July.