Background: Bandit is a humanoid socially assistive robot currently being developed for physical rehabilitation therapy. Recent research in the USC Interaction Lab (Fasola, Matarić) focuses on studying the possible benefits of robotic therapy in the context of human-robot interaction (HRI). Further information about Bandit can be located here.

Project details: Although the primary form of interaction between human and robot in the current implementation of Bandit is visual, using an external webcam mounted on the Pioneer base, a Wiimote is presently used to communicate commands. In order to promote a more natural form of interaction, we are developing a speech-to-text system that allows the human player to communicate to Bandit via a Bluetooth microphone. Speech input will be parsed for parts of speech using the Stanford NLP Parser, then interpreted in order to facilitate a command learning module. Thus, rather than attempting to directly map speech input strings to command functions, we will be able to teach Bandit to interpret human speech more intelligently within the context of its domain.

Elimination of the Wiimote will ensure that all commands currently issued by the Wiimote to Bandit (Break, Yes, No) are now relegated to the speech-to-text system via a direct implementation. Bandit will also have the capacity to learn the player's name during a normal interaction, provided it is the first session of the exercise program. Further commands will be parsed and learned contextually by Bandit.

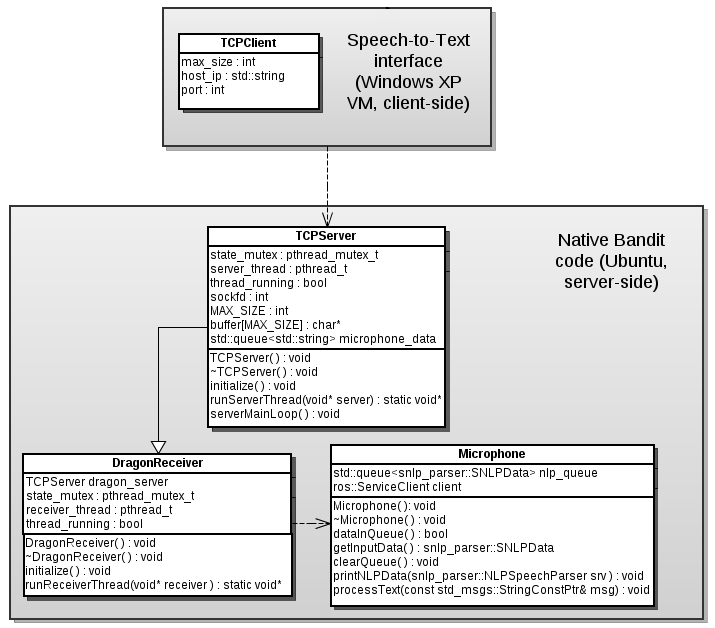

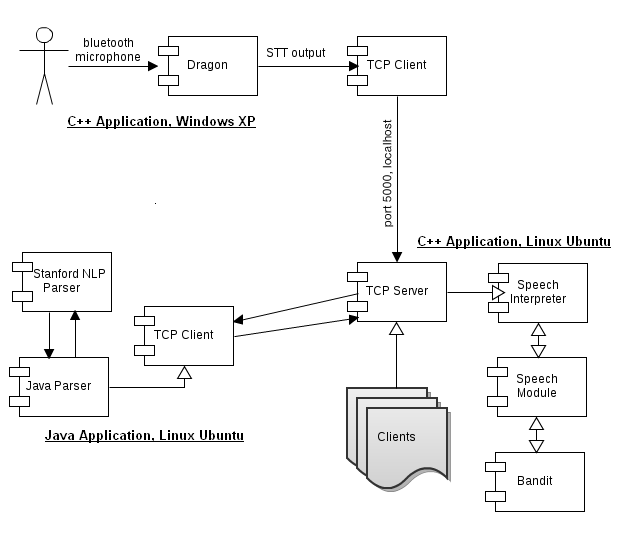

The initial speech-to-text system uses a modular approach to connect the Bluetooth microphone to the existing Bandit code. See Figure 2 below.

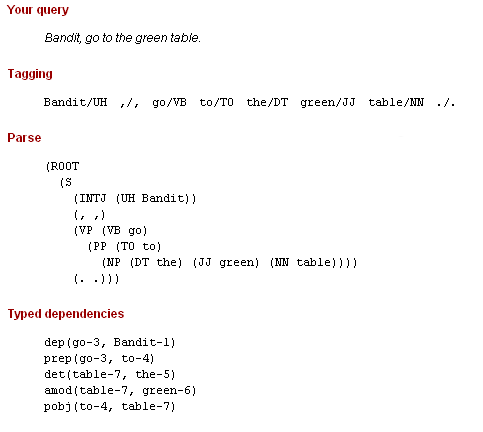

We created a Windows XP virtual machine running on the open-source virtualization software Virtualbox on a Ubuntu (10.10 Maverick) host machine. This virtual machine will execute Dragon NaturallySpeaking, a proprietary speech-to-text software that we are using as our primary solution for converting microphone input data to textual input data. Currently, we are using a Plantronics Calisto Headset with Bluetooth USB Adapter for our microphone. Once Dragon exports text, this text is redirected into a Win32 TCP client application running natively on the Windows VM. This TCP client is connected directly to a multithreaded TCP server running on Linux as a separate thread on Bandit. This server stores input and passes it to the dragon_sr object, which is responsible for maintaining input from the TCP server and ensuring they are processed correctly, then published as microphone_data. This input is then sent any node subscribing to microphone_data. From this node, data can either be directly processed or it can be sent to the snlp_parser server via ROS. In such case, the server will utilize the Stanford NLP parser to return relevant data about the input text to the client node for further handling (Figure 3).

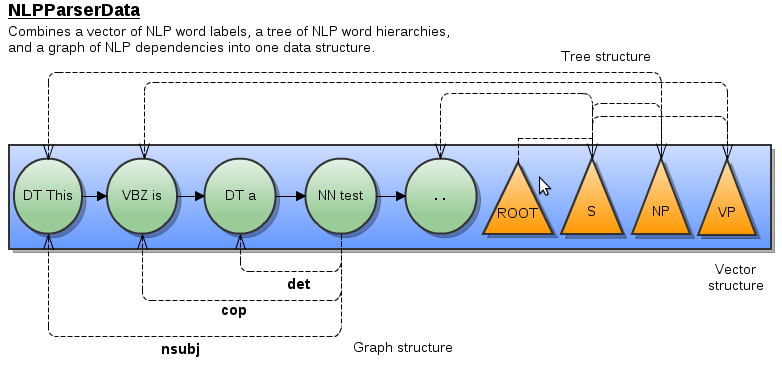

With these modules developed, we developed the necessary components to interface with the Stanford NLP parser API. First, because the Stanford NLP Parser is written in Java, a Java application was developed to directly access relevant NLP data. The application, which functions as a Java server, takes in input text from a C++ interpreter application that receives requests for NLP data via ROS and returns all NLP data (word labels, parser tree, graph dependencies) in textual format. The C++ interpreter inside the snlp_parser node is then responsible for converting any input text from the microphone into one data structure encapsulating all NLP information. This data structure can then returned to the ROS node requesting the data for further processing.

Current work: We are currently in the process of debugging and documenting all project code. Once all code is committed to the SVN repository, we will develop a final live demo demonstrating both the speech-to-text and NLP parsing systems integrating with the actual robot.

Reports:

Documentation: