CSCI 599 - Perceiving and Controlling Humanoid Behavior

CSCI 599

Perceiving and Controlling Humanoid Behavior

|

Taught: Spring 2001

If you are enrolled in this class, please check this page regularly to

find new or updated information.

Instructor

Office : SAL 228

Phone : (213) 740-4520

Email : mataric@cs.usc.edu

WWW : http://robotics.usc.edu/~maja

Office

Hours : by appointment; send email or talk w/ prof. after class

Time and place

Thursdays, 2pm-4:50pm, location: SAL 322.

Course description

This is a seminar-style hands-on survey course. We will read

papers covering two related topics: 1) control of humanoid robots and

simulations, and 2) perception and understanding of human and humanoid

(i.e., simulated and robotic) behavior.

This course is motivated by two currently surging areas of research:

humanoid robot and character control (relevant to both robotics and

graphics/animation), and perception and understanding of human and

humanoid behavior (relevant to machine vision, robotics, and AI).

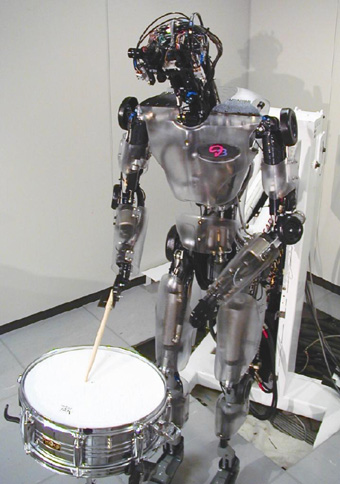

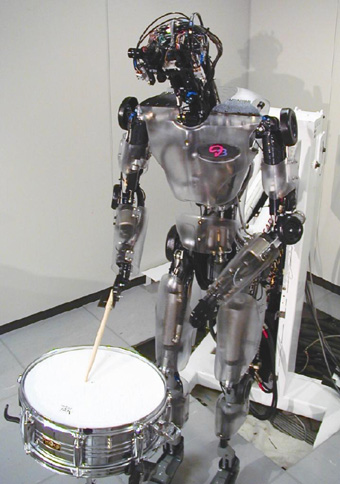

We are currently at the edge of a new frontier in robotics: the

technology has finally reached a stage where practical humanoid robots

can be built, as has been manifested by the Honda P3, the Sarcos

full-body robot, the MIT Cog (torso) robot, NASA's Robonaut, and many

others in Japan. As such highly complex system are becoming

available, how to control them becomes a major open research issue.

The challenge of humanoid control puts to the test classical notions

of robotics, as it demands scalability, robustness, and flexibility.

Approximately half of the reading material in thic course will deal

with this topic area.

Perceiving and understanding natural, unconstrained behavior of humans

is a complex problem, not only from the perspective of machine

vision, but from higher-level reasoning as well. The goal of that

area of research is to be able to understand the observed activity,

whether it be the movement of an individual (e.g., Mary is

performing a tennis serve), the interaction of a small group (e.g.,

one person is presenting, the rest are listening; there is a small

gathering forming), or the general behavior of a crowd (e.g., there is

a rally going on, the crowd is disbanding, etc.). Methods for

addressing these complex issues encompass the range from low-level

sensory processing and sensor fusion, to statistical models of

movement, to high-level models of intentionality. Approximately half

of the reading material in this course will be devoted to this topic

area.

This class consists of reading, presenting, discussing, and crituquing

papers. It also involves weekly short (one-page) reports on the

covered papers, and a final project, presented it to the class, and

accompanied by a final report. Presentations, critiques, reports, and

projects are all described in more detail below.

No textbook is used in this course; instead, we will read original

papers from the field, and discuss and critique them in class.

Students will take turns presenting and debating the readings, and

will turn in one-page summaries of the readings each week.

Prerequisites Undergraduate AI (e.g., CSCI 460) or graduate

AI (CSCI 561a) or Introduction

to Robotics (CSCI 445) or CSCI 584

or permission of instructor.

Reading List

Note: the following is a list of readings from which the course papers

will be drawn. The list below is not necessarily complete, but not

nearly all of the papers on the list will be read in the course. In

Spring 2001, two papers will be read per week, totalling 26 papers for

the semester.

Perception of human/oid motion

Visual

perception of biological motion and a model for its analysis by

G. Johansson (1973), Perception and Psychophysics 14, 201-211

Subconfigurations

of the human form in the perception of biological motion by J. Pinto,

M. Shiffrar, (1999), Acta Psychologica 102, 293-318

Efficient

Matching of Pictorial Structures by Pedro F. Felzenszwalb,

Daniel P. Huttenlocher, (2000), CVPR 2000

Towards detection of human motion by Y. Song, X. Feng,

P. Perona, (2000). [alternate

link]

Finding People

by Sampling by Sergey Ioffe and David Forsyth, (1999), International

Conference on Computer Vision

Articulated Body Motion Capture by Annealed Particle Filtering by

J. Deutscher, A. Blake, I. Reid, (2000)

Automatic

Joint Parameter Estimation from Magnetic Motion Capture Data by

J. O'Brien, B. Bodenheimer, G. Brostow, J. Hodgins

Real-time

closed-world tracking by S. Intille, J. Davis, A. Bobick, (1996)

M.I.T. Media Laboratory Perceptual Computing Section Technical Report

No. 403

Stochastic tracking of 3D human figures using 2D image motion by

H. Sidenbladh, M. Black, D. Fleet, (2000), European Conference on Computer

Vision, Dublin, Ireland

A

cluster-based statistical model for object detection by T. Rikert,

M. Jones, P. Viola, (1999), International conference on computer

vision

Complex

feature recognition: A Bayesian Approach for learning to recognize

objects by P. Viola, (1996), M.I.T. Artificial intelligence

laboratory

Exploiting

human actions and object context for recognition tasks by

D. Moore, I. Essa, M. Hayes, (1999), G.I.T, Graphics, Visualization

and Usability center, Technical report# GIT-GVU-99-11. [

alternative link]

Representation

and visual recognition of complex, multi-agent actions using belief

networks by S. Intille, A. Bobick, (1998), M.I.T. Media Laboratory

Perceptual Computing Section Technical Report No. 454

A

framework for recognizing multi-agent action from visual evidence

by S. Intille, A. Bobick, (1999), M.I.T. Media Laboratory Perceptual

Computing Section Technical Report No. 489

The

representation and recognition of action using temporal templates

by J. Davis, A. Bobick, (1997), M.I.T. Media Laboratory Perceptual

Computing Section Technical Report No. 402

Categorical

representation and recognition of oscillatory motion patterns by

J. Davis, A. Bobick, W. Richards, (2000), Pro. Comp. Vis. and

Pat. Rec. June 2000

Automatic

Generation of Kinematic Models for the Conversion of Human Motion

Capture Data into Humanoid Robot Motion, A. Ude; C. Man; M. Riley;

C. G. Atkeson , Proceedings, Humanoids 2000. [ technical

report version]

Coupled

hidden markov models for complex action recognition by Matthew

Brand and Nuria Oliver and Alex Pentland (1996), Proceeding, CVPR

1997

Monocular

perception of Biological motion-detection and Labeling by Y. Song

and L. Goncalves and E. Bernardo and P. Perona (1999), ICCV 99

Monocular

perception of biological motion-clutter and partial occlusion by

Y. Song and L. Goncalves and P. Perona (2000), Proceeding ECCV

2000

Eigenfaces

for recognition by M. Turk and A. Pentland (1991), MIT

Pfinder:

Real-time tracking of the human body by C. Wren and

A. Azarbayejani and T. Darrel and A. Pentland (1996), WFGR 96

A 3-D model-based tracking of humans in action: a multi-view

approach by D. Gavrila and L. Davis (1996), CVPR96

Real-time human

motion analysis by image skeletonization, H. Fujiyoshi and

A. Lipton (CMU). [alternate

link]

Control of human/oid motion

Quantitative examinations of internal representations for Arm

Trajectory Planning: Minimum commanded torqe change model by E. Nakano

and H. Imamizu and R. Osu and Y. Uno and H. Gomi and T. Yoshioka and

M. Kawato (1999), The American Physiological Society 99

Hierachical multiple paired forward-inverse models for generation and

perception of motor sequences, by M. Haruno and K. Doya and

D. Wolpert and M. Kawato (2000)

Multiple paired forward-inverse models for sensorimotor learning

and control, by M. Haruno and D. Wolpert and M. Kawato (2000)

Making Complex Articulated Agents Dance: an analysis of control

methods drawn from robotics, animation, and biology, by Maja J

Mataric, Victor B. Zordan, and Matthew M. Williamson, Autonomous

Agents and Multi-Agent Systems, 2(1), Mar 1999, 23-44.

Style

Machines by Matthew Brand and Aaron Hertzmann (2000), MERL,

TR-2000-14

Impedance

Control as a Merging Mechanism for a Behavior-Based Architecture

by G.Beccari and S. Stramigioli, Proceedings, IEEE ICRA, 1998.

Linear

combination of primitives in vertebrate motor control by

F. Mussa-Ivaldi, S. Giszter, and E. Bizzi, PNAS, Vol 91, Aug 1994.

Impedance Control: An Approach to Manipulation, N. Hogan,

Journal of Dynamic Systems, Measurement, and Control, Vol 107, March

1985.

The coordination of arm movements; an experimentally confirmed

mathematical model, T. Flash and N. Hogan, Journal of Neuroscience,

Vol 7, 1985.

A

neurobiological perspective on humanoid robot design by

S. Giszter, K. Moxon, I. Rybak, and J. Chapin, IEEE Intelligent

Systems, July/Aug 2000.

Aminating

human athletics, J. Hodgins, W. Wooten, D. Brogan, and J. O'Brian,

SIGGRAPH 1995.

Computation aspects of motor control and motor learning,

M. Jordan, Handbook of Perception and Action: Motor Skills, H. Huer

and S. Keele, eds, 1993.

Programmable

Pattern Generators, S. Schaal and D. Sternad, Proceedings, 3rd

Int. Conference on Coputational Intelligence in Neuroscience,

1998.

A computational theory for movement pattern recognition based on

optimal movement pattern generation, Y. Wada, Y. Koie, E. Bateson,

and M., Biological Cybernetics, Vol 73, 1995.

Rhythmic

robot control using oscillators, M. Williamsn, Proceedings IROS

1998.

Tracking

and Modifying Upper-body human motion data with dynamic simulation

by V. Zordan, J. Hodgins

On the Emulation of Natural Movements by Humanoid Robots,

M. J. E. Richardson; T. Flash, Proceedings, Humanoids 2000.

Spring 2001 Syllabus

Note: please bring the readings to class on the assigned day

Jan 11 :

Introduction to the course, reading assignments.

Jan 18 :

Articulated

Body Motion Capture by Annealed Particle Filtering by

J. Deutscher, A. Blake, I. Reid, (2000); Presenter: Chad Jenkins.

Automatic

Joint Parameter Estimation from Magnetic Motion Capture Data by

J. O'Brien, B. Bodenheimer, G. Brostow, J. Hodgins. Presenter: Ajo Fod.

Jan 25 :

Visual

perception of biological motion and a model for its analysis by

G. Johansson (1973), Perception and Psychophysics 14, 201-211. [video]

Presenter: Michael Nili.

Making Complex Articulated Agents Dance: an analysis of control

methods drawn from robotics, animation, and biology, by Maja J

Mataric, Victor B. Zordan, and Matthew M. Williamson, Autonomous

Agents and Multi-Agent Systems, 2(1), Mar 1999, 23-44. [more info and

videos] Presenter: Brian Gerkey.

Feb 1 :

Linear

combination of primitives in vertebrate motor control by

F. Mussa-Ivaldi, S. Giszter, and E. Bizzi, PNAS, Vol 91, Aug

1994. Presenter: Tin Nguyen.

A

neurobiological perspective on humanoid robot design by

S. Giszter, K. Moxon, I. Rybak, and J. Chapin, IEEE Intelligent

Systems, July/Aug 2000. Presenter: Daniel Arbuckle.

Feb 8 :

Exploiting

human actions and object context for recognition tasks by

D. Moore, I. Essa, M. Hayes, (1999), G.I.T, Graphics, Visualization

and Usability center, Technical report# GIT-GVU-99-11. [

alternative link]. Presenter: Monica Nicolescu.

Impedance

Control as a Merging Mechanism for a Behavior-Based Architecture

by G.Beccari and S. Stramigioli, Proceedings, IEEE ICRA,

1998. Presenter: Chad Jenkins.

Feb 15 :

-->Background reading material on HMMs:

An HMM tutorial; another HMM tutorial.

Coupled

hidden markov models for complex action recognition by Matthew

Brand and Nuria Oliver and Alex Pentland (1996), Proceeding, CVPR

1997. Presenter: Chad Jenkins.

Style

Machines by Matthew Brand and Aaron Hertzmann (2000), MERL,

TR-2000-14. Presenter: Ajo Fod.

Feb 22 :

Subconfigurations

of the human form in the perception of biological motion by

J. Pinto, M. Shiffrar, (1999), Acta Psychologica 102,

293-318. Presenter: Michael Nili.

A 3-D model-based tracking of humans in action: a multi-view

approach by D. Gavrila and L. Davis (1996), CVPR96. Presenter:

Tin Nguyen.

Mar 1 :

Representation

and visual recognition of complex, multi-agent actions using belief

networks by S. Intille, A. Bobick, (1998), M.I.T. Media Laboratory

Perceptual Computing Section Technical Report No. 454. Presenter:

Michel Nili.

Towards

detection of human motion by Y. Song, X. Feng, P. Perona,

(2000). [alternate

link] Presenter: Daniel Arbuckle.

Mar 8 : Project proposals due in class.

The

representation and recognition of action using temporal templates

by J. Davis, A. Bobick, (1997), M.I.T. Media Laboratory Perceptual

Computing Section Technical Report No. 402. Presenter: Monica

Nicolescu.

Real-time human

motion analysis by image skeletonization, H. Fujiyoshi and

A. Lipton (CMU). [alternate

link]. Presenter: Brian Gerkey.

Mar 15 : No class, Spring Break.

Mar 22 : no class, out of town, readings and

presentations are added to classes below

Mar 29 :

Computation aspects of motor control and motor learning,

M. Jordan, Handbook of Perception and Action: Motor Skills, H. Huer

and S. Keele, eds, 1993. Presenter: Ajo Fod.

The coordination of arm movements; an experimentally confirmed

mathematical model, T. Flash and N. Hogan, Journal of Neuroscience,

Vol 7, 1985. Presenter: Michael Nili.

On the Emulation of Natural Movements by Humanoid Robots,

M. J. E. Richardson; T. Flash, Proceedings, Humanoids 2000. Presenter:

Monica Nicolescu.

Apr 5 :

Categorical

representation and recognition of oscillatory motion patterns by

J. Davis, A. Bobick, W. Richards, (2000), Pro. Comp. Vis. and

Pat. Rec. June 2000. Presenter: Amit Ramesh.

Programmable

Pattern Generators, S. Schaal and D. Sternad, Proceedings, 3rd

Int. Conference on Coputational Intelligence in Neuroscience,

1998. Presenter: Amit Ramesh.

Apr 12 :

Rhythmic

robot control using oscillators, M. Williamsn, Proceedings IROS

1998. Presenter: Tin Nguyen.

Improved Tracking of Multiple Humans with Trajectory Prediction and

Occlusion Modeling, by R. Rosales and S. Scaroff

(1998). Presenter: Michael Nili and Tin Nguyen.

Apr 19 : no class, out of town, readings and

presentations are added to next class, below

Apr 26 :

Automatic

Generation of Kinematic Models for the Conversion of Human Motion

Capture Data into Humanoid Robot Motion, A. Ude; C. Man; M. Riley;

C. G. Atkeson , Proceedings, Humanoids 2000. [ technical

report version]. Presenter: Daniel Arbuckle.

Tracking

and Modifying Upper-body human motion data with dynamic simulation

by V. Zordan, J. Hodgins. Presenter: Brian Gerkey.

Multiple paired forward and inverse models for motor control, by

M. Haruno and K. Doya and D. Wolpert and M. Kawato (2000). Presenter:

Amit Ramesh.

May 8 : Final: project presentations, 2-3pm.

May 10 : Final: project presentations, 2-5pm.

Presentations, Critique, and Weekly Reports

The student presenting a paper in a given class should prepare a clear

30-minute presentation. This presentation should assume that the

audience has read the paper, and not spend more than about 5 minutes

summarizing it. Most of the presentation should be spent on

discussing the paper, its strengths, weaknesses, any points needing

clarification, and addressing any questions the other students may

have.

For information on how to present a paper, look here

and for even more detail, look here.

In this course, formal presentations are not expected. However, you

are expectd to prepare at least an organized board presentation, or

for involved papers, overheads; projected presentations are optional

but may be more clear and manageable for some papers. Please work with

Prof. Mataric's assistant, Kusum Shori (kusum@pollux.usc.edu) ahead of

time to arrange for the necessary equipment you will need to present

your paper.

For each paper being covered on a given day (whether you are

presenting it or not), you will bring to class a one-page report,

consisting of two parts: 1) a paragraph or two summarizing the paper

(copies of the abstract and/or intro and/or conclusions will not do!);

2) two or so paragraphs critiquing the paper. The critique should

take the form of addressing the strengths and weaknesses of the paper,

comparing it briefly to other work, if relevant, etc.

In summary, for each class, you should bring: 1) the two papers being

discussed, 2) two reports, one for each of the papers, 3) a

presentation, if you are presenting one of the papers, 4) a great

enthusiasm for discussing the two papers. :)

Course Project

The principles learned in class will be applied in individual

programming projects, either employing humanoid perception or control.

For the former, the students are encouraged to either seek out on-line

vision databasees, or obtain input data in the lab (talk to the

instructor early). For the later, a simulation testbed will be made

available (again, talk to the instructor early). Project proposals

describing specific goals will be due in the middle of the semester

(see syllabus). A detailed

final paper is a required part of the final project; it will be

due on the last day of

presentations, and it will be made available to all students in

the class, preferrably via a Web pointer.

Helful information for preparing your project presentation can be

found

here, and for preparing your final paper here.

Grading

Performance in the class will be based on participation in class

discussions, presentations of the papers, weekly reports on the

readings, and a final project (with an associated paper and

demonstration). Regular attendance and participation is required; if

you need to miss a class, please speak to Prof. Mataric ahead of

time.

Specifically, final course grades will consist of:

20% reading reports

20% in-class discussion participation

30% in-class paper presentations

30% final project implementation and final paper

Related Web Sites and Readings

A recent article on the new

Sony humanoid robot.

Sarcos.

The ATR DB humanoid.

The Wasseda University Wabian

humanoid.

The MIT Cog humanoid torso.

The NASA Robonaut.

The first IEEE/RAS Humanoids

Conference 2000.

(These are mostly simulations of mobile

robots. Talk to Prof. Mataric about humanoid simulation if needed for

the course project.)

U.S.C. Computer Science Department

U.S.C.

Back to top