Implementation

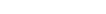

My algorithm begins with an input alphabet which can be any set of discrete concepts - numbers, letters, states of a robot - plus the prior probabilities of each element of the input alphabet.

The algorithm generates an initial set of symbols and then proceeds to optimize a measure of symbol fitness (described briefly below) via simulated annealing. When the algorithm converges, the final symbols are assigned to the input alphabet with an entropy encoding.

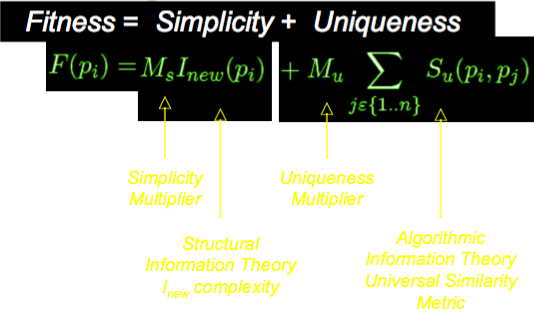

The algorithm is attempting to maximize the fitness measurement summarized above. The fitness function has two elements. The first, simplicity, is derived from Structural Information Theory and encourages symbols to be as simple as possible (as perceived by humans). The second element is uniqueness, which is based on the Algorithmic Information Theory Universal Similarity Metric and encourages symbols to be as distinct from each other as possible. Optimizing both of these elements is challenging because they are correlated concepts.