|

|

| |

|

||||||||||||

| |

|

|

|

|

|

|

|

|

|

||||

| |

|

|

|

|

|||||||||

| |

|

|

|

|

|

|

|||||||

| |

|

|

|

|

|||||||||

|

Current Projects |

|

|

Aerial Deployment |

|

|

This research deals with using the autonomous helicopter to precisely deploy objects at pre-specified GPS locations. Either a single pre-surveyed location or a set of way-points can be specified at which to deploy the objects. For accurate deployment, the motion of the helicopter is taken into consideration, and the correct deployment position is actively estimated using Newton's laws of motion. Experimental results from flight tests show that the deployment is consistent and accurate to approximately 1.5 meters. Currently we are in the process of extending it to vision based deployment. This would involve detection of a target, tracking of the target and then autonomous deployment. |

|

Autonomous Landing |

|

|

This work deals with the design and implementation of a real-time, vision-based landing algorithm for an autonomous helicopter. The landing algorithm is integrated with algorithms for visual acquisition of the target (a helipad), and navigation to the target, from an arbitrary initial position and orientation. We use vision for precise target detection and recognition, and a combination of vision and GPS for navigation. The helicopter updates its landing target parameters based on vision and uses an onboard behavior-based controller to follow a path to the landing site. Results from flight trials in the field demonstrate that our detection, recognition and control algorithms are accurate, robust and repeatable. |

| 3D Navigation | |

|

The aim of this research is to allow the helicopter to detect obstacles in the environment, and avoid them. This capability is vital when flying autonomously in environments such as built-up urban areas. Our goal is to have the helicopter fly autonomously down an 'Urban Canyon' without colliding into any buildings. Such a capability would make tasks such as urban search and rescue and surveillance possible. Optic-flow and stereo-vision based techniques are being employed to achieve this goal.

|

| Emulating Spacecraft Dynamics on a Autonomous Helicopter | |

|

|

The next generation of Martian landers (2007 and beyond) will employ precision soft-landing capabilities based on vision. These algorithms will have to be tested with extensive descent imagery in Mars-analog terrain on Earth. To enable this, we propose a novel technique for testing spacecraft landing algorithms during the terminal landing phase. We propose the use of a model helicopter to emulate the landing dynamics of a spacecraft. Our controller accepts thruster inputs (like those on a spacecraft) and converts them into appropriate helicopter stick controls such that the resulting trajectory of the helicopter is close to the trajectory that would have been achieved by simply providing the same thruster inputs to a spacecraft. The approach relies on simplified models of the spacecraft and helicopter dynamics. Initial results in simulation indicate that the approach is feasible, with tracking accuracies on the order of 5 meters. |

| A Simulation Testbed for Helicopter Research | |

|

We have developed a helicopter simulator which includes a full dynamics model of the Bergen Industrial Twin RC Helicopter. It also simulates the RC servos and includes a wind model to simulate various natural wind effects. We plan to use the simulator to test various kinds of controllers (both classical PID techniques as well as more advanced LQR/LQG techniques). Also the simulator is an ideal platform for testing various path planning trajectory planning algorithms before implementing on the real helicopter. The graphics engine is written in OpenGL and the entire simulator is written in C, using GNU Scientific Library. The models of the Helicopter used in this simulator have been taken from http://autopilot.sourceforge.net |

| Stealthy Fixation | |

| |

The aim of this research is to develop techniques that allow the helicopter to track a target or number of targets in a stealthy way. For example, when following a ground robot, the helicopter should always stay positioned behind it (assuming the target has forward facing sensors). |

| RAPTOR: Research on Aerially Precise Teams of Robots | |

|

The aim of the RAPTOR project is to develop a small autonomous helicopters platform, and then use a team of such helicopters to fly in formation and perform other team-oriented tasks. Progress has been made in producing the lightweight electronics needed for this application, and experiments on ground robots and in simulation have been used to test various formation flight algorithms. |

| Moving Target Detection and Tracking from the Air | |

|

Robust detection of moving objects from a mobile robot is not easily achievable since there are two motions involved: the motions of moving objects and the motion of the sensors used to detect the objects. We have experimented with a probabilistic approach for moving object detection from a mobile robot using a single camera in outdoor environments. The ego-motion of the camera is compensated using corresponding feature sets and outlier detection, and the positions of moving objects are estimated using an adaptive particle filter and EM algorithm. |

| Urban Feature Detection and Tracking | |

|

When flying in urban environments, it may be necessary to detect and monitor certain features, such as windows and doorways. By processing the image from a single camera, we are currently able to detect windows. Once a user has designated a certain window shown in the image, the window can be tracked over time. Our next goal is to use this information to control the helicopters such that it stays in position in front of the designated window. |

|

Past Projects |

|

| Air-ground Cooperation | |

|

This research was done to explore and demonstrate various ways in which ground and air based robots could cooperate to achieve a goal. For example, a helicopter could be used to deploy a smaller wheeled robot to explore areas that are not visible from the air (under cars or other such obstacles). An aerial robot could also cooperate with ground robots to better localize itself, given the ground robots have good localization. |

| Learning Helicopter Control from a Human Pilot | |

|

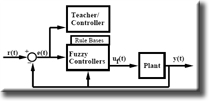

This research addressed the helicopter controller synthesis and tuning problem. A model-free 'teaching by showing' approach was used to train a fuzzy-neural controller for autonomous helicopters, in simulation and hardware. A controller was generated using training data gathered while a teacher operated the helicopter. In Simulation, controllers developed for roll and pitch were capable of meeting the performance criteria. In hardware however, the roll controller could not meet the desired performance criteria. |

| Behavior-based Control of a Robot Helicopter | |

|

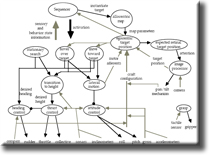

This research involved developing a behavior based controller for the helicopter. Low-level behaviors were developed to independently control the attitude, heading and thrust of the helicopter. Mid-level behaviors included lateral motion and transition to height, while high-level behaviors included move toward target and hover over target. This early work laid the foundation of the controller that is still used in the latest generation AVATAR. |

|

webmaster Last modified: May 29 2006

|

|