Introduction and Significance

To successfully engage in social interactions with humans, artificial communicators such as embodied conversational agents (ECAs) or sociable robots should provide communicative functionality that is both natural and intuitive. Representing an integral component of human communication, co-verbal gestures are frequently modeled and implemented to extend the non-verbal expressiveness of sociable agents.

The work of two research communities is most relevant to this full-day workshop: firstly, in the area of computer animation, researchers have developed frameworks to realize multimodal communication behavior in virtually embodied conversational agents; secondly, in the field of robotics, researchers have explored various approaches to generate non-verbal behaviors along with speech in sociable robots. The challenges are similar in that both research areas demand a high degree of control and flexibility so that human-like motion can be adapted to a system with non-human kinematics. The levels of complexity encountered in each field, however, are not equivalent. Although the range of different body types found in virtually embodied agents is manifold and hence challenging, character animation has less restrictive motion than even the most state-of-the art humanoid robots. For example, animation of virtual agents reduces or even eliminates the problems of handling joint and velocity limits; in a robot body, however, these have to be explicitly addressed given real physical restrictions.

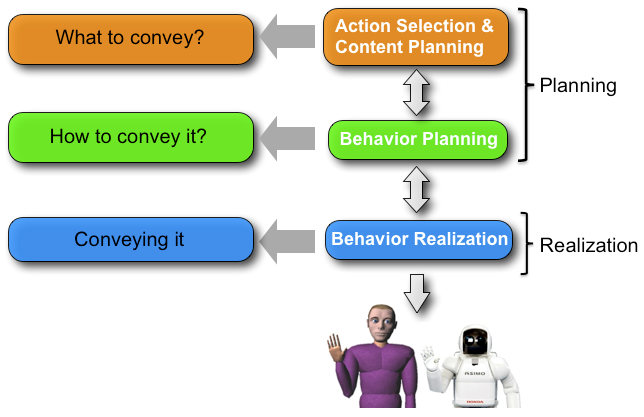

Generally, computational approaches to synthesizing multimodal behavior can be modeled as three consecutive tasks as illustrated in Figure 1: firstly, determining what to convey (i.e., content planning); secondly, determining how to convey it (i.e., behavior planning); finally, conveying it (i.e., behavior realization). These tasks can be outlined as two major stages of behavior generation: planning and realization. In an attempt to identify intersections between the two above mentioned research fields, approaches on both the planning and the realization levels will be considered and discussed within the scope of this workshop.

|

Relevance to the Main Conference and Workshop Objectives

This full day workshop will elicit participation from those interested in the general conference themes of multimodal interaction design and system development. Specifically, it aims to bring together researchers from the embodied conversational agent (ECA) and sociable robotics communities to spark discussion and collaboration between the related fields. The focus of the workshop will be on co-verbal behavior production — specifically, synchronized speech and gesture — for both virtually and physically embodied platforms. The workshop will elucidate the subject in consideration of aspects regarding planning and realization of multimodal behavior production. Topics discussed in the workshop will highlight common and distinguishing factors of their implementations within each respective field. An interactive panel discussion will provide participants with the opportunity to learn about related state-of-the-art research from a group of experts with diverse backgrounds. The breakout session as part of the workshop aims to focus on the definition and development of common standards for both the specification and realization of multimodal behaviors. Ideally, such standards should target solutions that are suitable for both ECAs and sociable robots by identifying interfaces that can bridge the gap between these unequally embodied platforms.