The goal of my research is to employ user-centered methods to enable humans and robots to safely and seamlessly work alongside each other. In my work, I have explored several interaction scenarios in which humans can take on various roles, such as bystander, collaborator, or operator. Success across these various types of interaction require a deep understanding of human behavior. Thus, a fundamental component of my research involves modeling human users to better design robotic systems and behavior. This work lies at the intersection of computer science, design, and psychology and requires the use of both qualitative and quantitative techniques.

For more information on research that took place before 2012, please refer to my publications.

Nonverbal Signals for Human-Robot Collaboration

Collaboration can take on many different forms ranging from simple communication to highly coordinated physical tasks. The goal of my doctoral research is to enable robots to act as effective and intelligent collaborators at various levels of interaction. Towards this goal, we explore two distinct challenges of robot communication: 1) the design of nonverbal signals for robot communication across different platforms and 2) the development of models for deciding optimal times to communicate with humans. Communicating information about a robot's internal state enables humans to better understand and predict the robot's behavior. This research primarily focuses on light and sound signals and has been applied to mobile robots, telepresence systems, and free-flying robots.

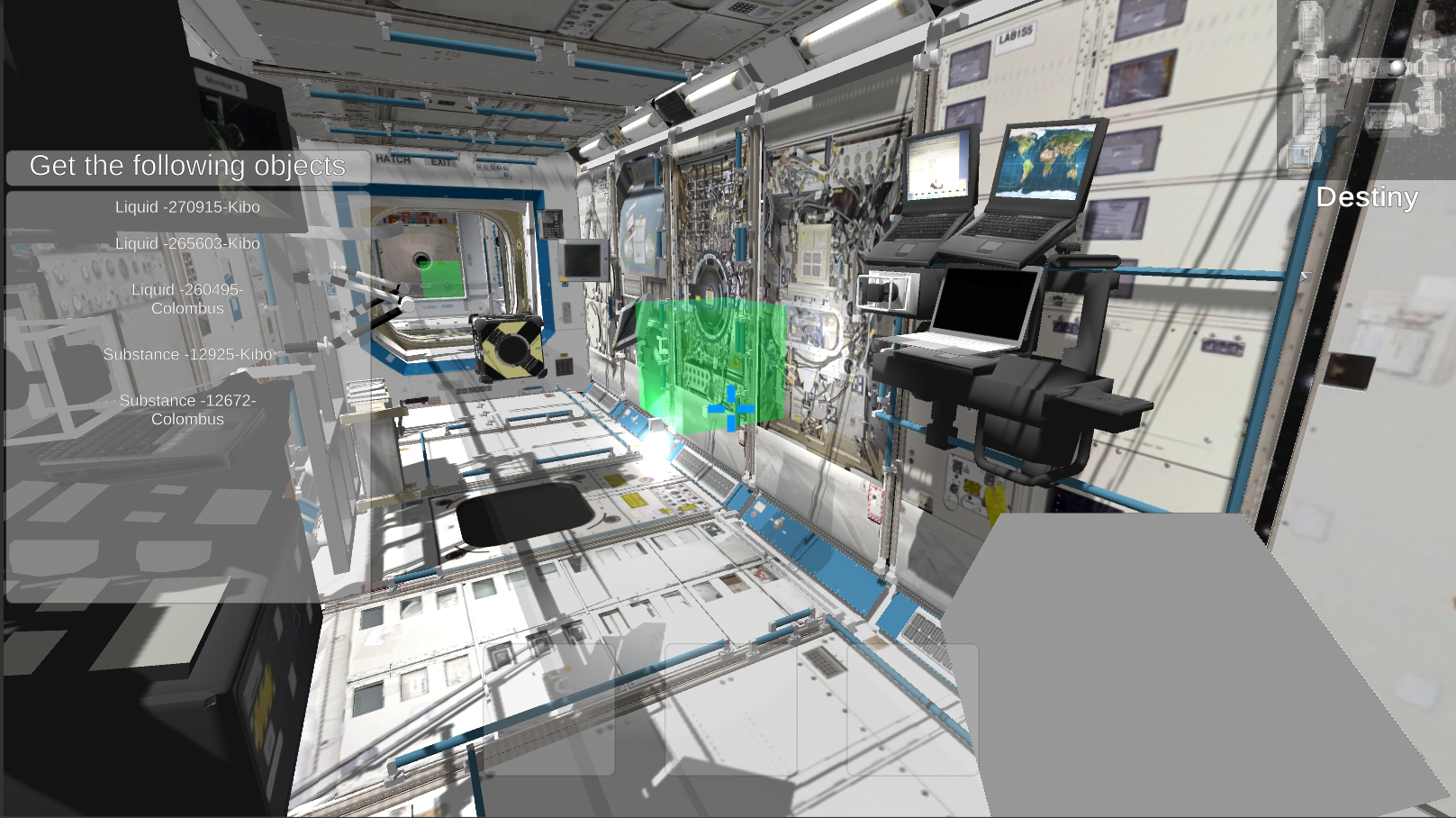

Virtual Simulation of Human-Robot Interactions on the ISS

Robots present many opportunities for providing assistance during future space exploration. Since these robots are expected to operate with and around humans in the space station, it is necessary to explore and validate different robot behaviors that facilitate interaction with humans at various levels. Virtual environments enable researchers to simulate the interaction conditions of space, which are difficult to replicate on earth, and to gather data in advance of robot deployment. We are currently developing a 3D Unity simulation to test a free flying robot’s communication behaviors with astronauts located on the International Space Station (ISS).

Developing Robot Control Models for Social Touch Models

Humans utilize physical social contact for a number of purposes, such as providing reassurance, reducing anxiety, and expressing concern. More importantly, social touch creates valuable bonds between people that play a large role in our interactions. In this work, we seek to develop models of social touch that can be applied to robot manipulators.

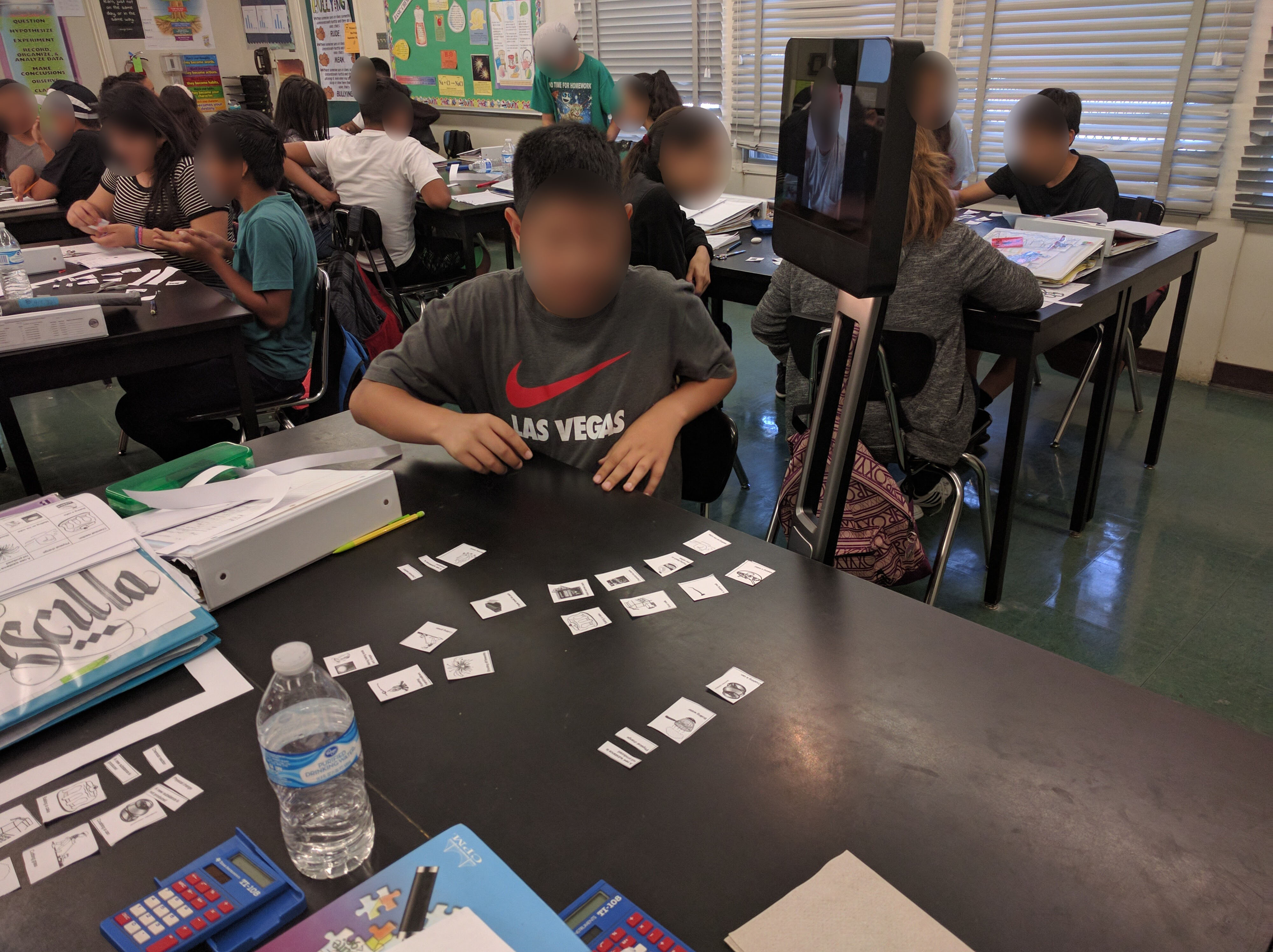

Telepresence Robots for Increased Access to Education

Telepresence robots have the potential to improve access to K-12 education for students who are unable to attend school for a variety of reasons. Since previous telepresence research has largely focused on the needs of adult users in workplace settings, it is unknown what challenges must be addressed for these robots to be effective tools in classrooms. In this work, we seek to identify and address challenges for telepresence robot usage in the classroom when operated by a remote student. Toward this goal, we conducted field sessions in which four designers operated a telepresence robot in a real K-12 classroom to identify key research challenges and present design insights meant to inform the HRI community in particular and robot designers in general. We also conducted a user study exploring the effects of personalization of robot appearance on operators' perceptions of embodiment, presence, and ownership of the robot. Lastly, we are currently conducting a multi-session user study comparing students' educational experience when utilizing a telepresence robot with in-person classroom attendance and traditional distance learning.

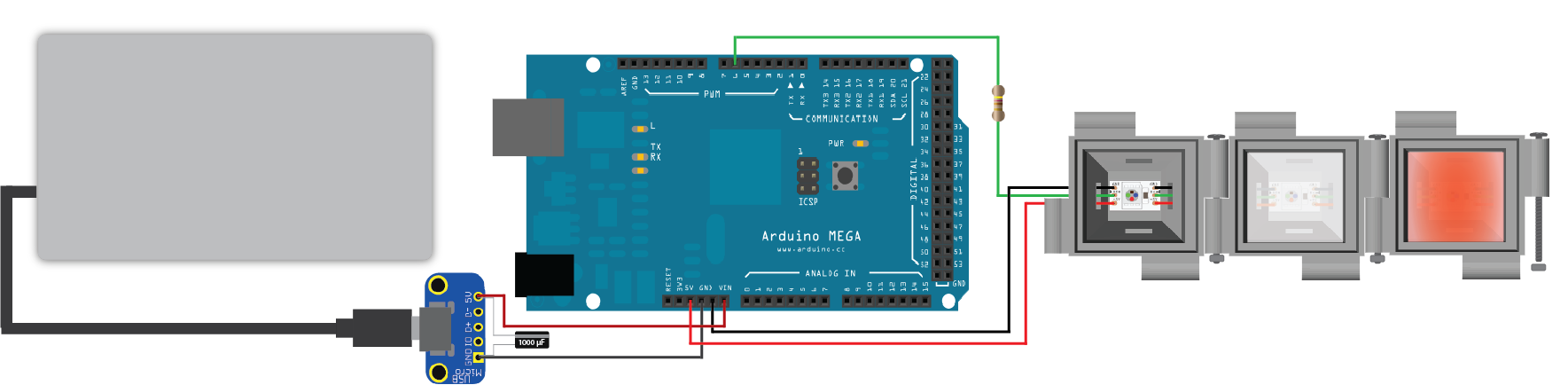

Designing a Modular Light Signaling Tool for Human-Robot Interaction

Recent work has shown the potential for lights to act as simple, yet expressive signaling mechanisms for use in a variety of human-robot applications. However, the wide range of robot shapes and sizes makes it difficult for researchers to quickly prototype and evaluate different light configurations and signal designs. In this work, we designed ModLight, a modular research tool consisting of a set of low cost light blocks that can be easily reconfigured to fit a myriad of robots and applications. ModLight also provides researchers, designers, and students with open-source software tools that enable them to visually design new signals and easily integrate them into existing systems. This work also serves to motivate the need for further research in developing light behaviors for use in human-robot interaction.

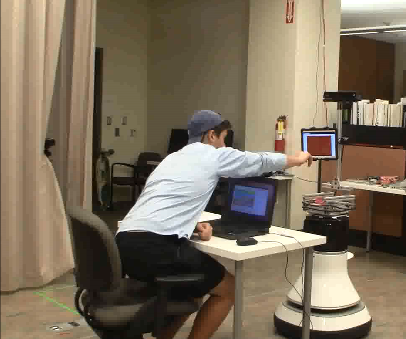

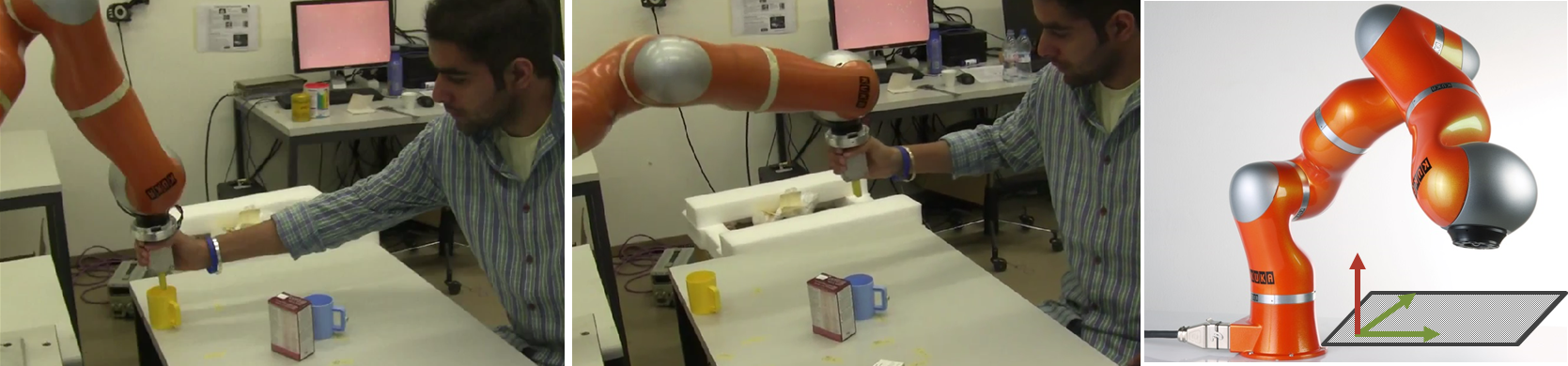

Demonstration Interfaces for Teaching Robot Motion Models

The success of a Learning from Demonstration system depends on the quality of the demonstrated data. Kinesthetic demonstrations are often assumed to be the best method of providing demonstrations for manipulation tasks; however, there is little research to support this. In this work, we explored the use of a simulated environment as an alternative to and in combination with kinesthetic demonstrations when using an autonomous dynamical system to encode motion. We performed a user study comparing three demonstrations interfaces for a manipulation task on a KUKA LWR robot and found .

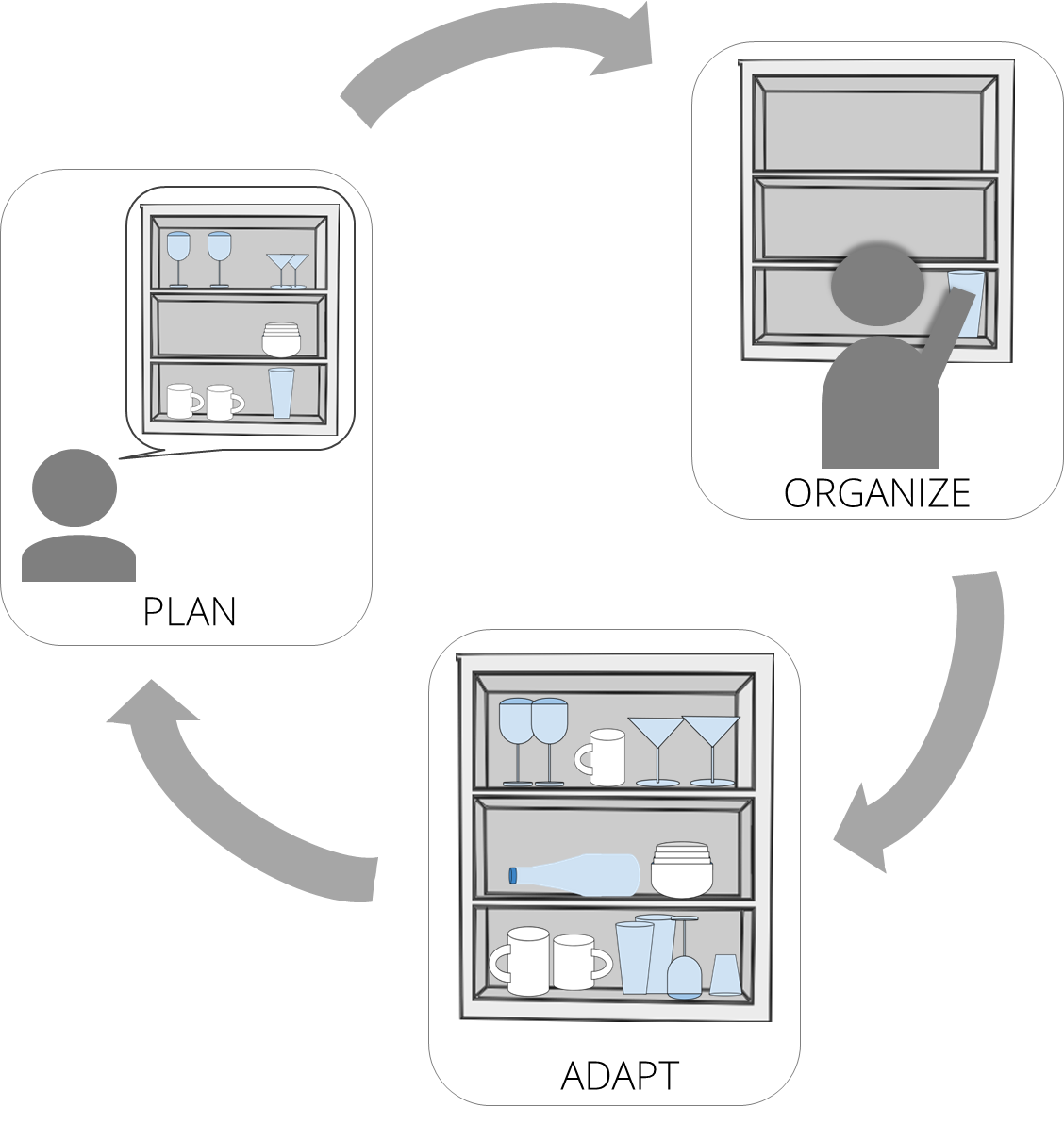

Robots in the Home: Qualitative and Quantitative Insights into Kitchen Organization

In the future, we envision domestic robots to play a large role in our everyday lives. This requires robots able to anticipate our needs and preferences and adapt their behavior. Since current robotics research takes place primarily in laboratory settings, it fails to take into account real human users. In this work, we explored how organization occurs in the kitchen through an exploratory in-home study. Our analysis includes qualitative insights towards robot behavior during kitchen organization, an open source dataset of real life kitchens, and a proof-of-concept application of this dataset to the problem of object return.

Perceived Robot Capability

Robotics research often focuses on increasing robot capability. If end users do not perceive these increases, however, user acceptance may not improve. In this work, we explored the idea of perceived capability and how it relates to true capability, differentiating between physical and cognitive robot capabilities. We developed a framework that outlined their potential relationships and performed two user studies, on robot speed and speech, exploring these relationships. Our studies identified two possible consequences of the disconnect between the true and perceived capability: (1) under-perception: true improvements in capability may not lead to perceived improvements and (2) over-perception: true improvements in capability may lead to additional perceived improvements that do not actually exist.