|

Region-based Approach: Multi-Target Tracking with Multiple Robots

|

|---|

|

| Motivation |

|---|

Autonomous target tracking where targets are tracked using a network

of sensors has many potential applications; for example, surveillance,

security, etc. We are particularly interested in the case where the

sensor network used for tracking is partially mobile i.e. some members

of the network have the ability to re-position themselves in response

to target motion. Such mobile robot-based trackers are attractive for

two reasons:

- They can potentially reduce the overall number of sensors

needed in the tracking network.

- They can adapt to the movement of the targets.

Formally, we are interested in the problem of tracking multiple

anonymous targets in a bounded planar environment using a network of

communicating robots and stationary sensors. The key problem is to

develop an online, coordinated, motion strategy for robot positioning

that leads to a high degree of coverage of the environment.

|

|

| Approach |

|---|

Our approach to the problem is to divide the environment into

topologically simple constituents called regions. The following figures

show the map of the second floor of our Computer Science Building

(which consists of two long, narrow corridors and two open offices) and

the corresponding topological map.

|

|

| Figure 1: Computer Science Building |

Figure 2: Correspoding Topological Map |

Given a topological map, every robot independently maintains two density

estimates (Robot Density and Target Density) for each region

indicating the estimated number of targets in a region relative to

the size of the region. The region-based approach controls robot

deployment at two levels:

- a coarse level of control causes robots to distribute themselves

across regions depending on the density estimates.

- Within a region robots employ other strategies to look for and

follow targets.

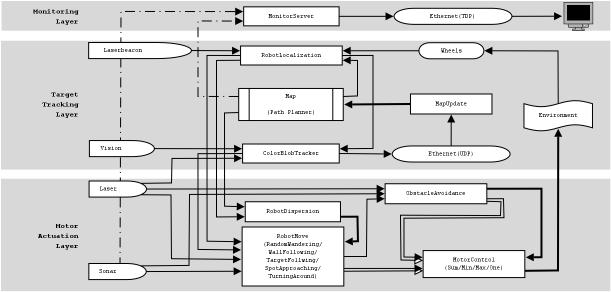

A behavior-based control system has been developed implementing the Region-based Approach. The following figure shows the control architecture of the system.

Figure 3: Control Architecture of the Behavior-based System

Refer to relavant papers for more details.

|

|

| Experiments |

|---|

|

To evaluate our approach, various experiments are perfomed with

ActivMedia Pioneer DX-2 robots and

the Player/Stage software.

Pioneer robots equipped with a SICK laser rangefinder and a Sony PTZ camera

are used for tracking, and each target is a Pioneer robot carrying

a bright-colored cylinder.

|

|

| Figure 4: Tracking robot |

Figure 5: Target |

The following topics are being studied through simulation and

real-robot experiments:

- General performance of the Region-based Approach compared to

a 'naive' strategy

- Correlation between the shapes of environments and the performance

of the Region-based Approach

- Search for proper urgency estimates, i.e. visibility maximization

- Optimal ratio of mobile robots to stationary sensors for a given

environment

Refer to relavant papers for more details.

|

|

| Video Clips |

|---|

|

Laser-based Tracker (AVI, 3.2MB)

The clip shows the output of a laser rangefinder and

the interpretation result. In the upper window, the yellow line

is a latest reading, the red line is a previous reading,

and the green line is a segmentation result.

In the lower window, the red line is a difference of conjecutive

readings, and the blue line indicates candidate regions of

moving targets. The intersection of the candidate regions

and the segmented regions are interpreted as moving targets.

|

|

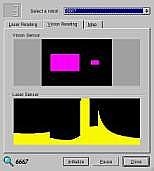

Vision-based Tracker (AVI, 1.9MB)

The clip shows the output of a vision sensor and a laser rangefinder.

A vision sensor is used to track bearings of moving targets,

and a laser rangefinder is used to measure the distance to the

moving targets. The upper window shows the output of a vision

sensor (moving targets shows in purple), and the low window

shows the output of a laser rangefinder.

|

|

Mobile Robot Tracker (AVI, 39.2MB)

The clip shows how the mobile robots track multiple targets.

The robot is using the vision-based tracker, and you can

observe the robot loosing targets once because it is totally reactive

system in terms of tracking. You can also see targets' movement; they

shows wall-following, random-wandering, random-turning.

|

|

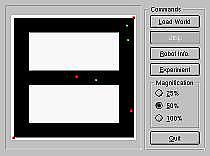

Internal Map (AVI, 1.2MB)

The clip shows an internal, topological map of a robot.

The robot would decide to move to the right lower region

(indicated by a yello light bulb) because the region contains

more targets than robots/sensors.

|

|

Monitoring Program (AVI, 3.9MB)

The clip shows a monitoring program. The red dots are

robots/sensors, and the green dots are detected moving targets.

It shows that one robot is heading to south but change

direction to north because the right uppoer region is more crowd.

|

|

Region-based Approach 1 (AVI, 0.8MB)

The clip shows the actual screen shot of simulations.

The blue robots are fixed sensors, the yellow and green robots

are mobile sensors, and the purple robots are moving targets.

It shows the yellow robot stops following a moving target

and moves to the other crowd region because it would improve

the overall performance.

|

|

Region-based Approach 2 (AVI, 8.1MB)

The clip shows how robots cooperate together to track multiple

targets. There were too many targets in the current region, so

the other robot comes to the region and helps to track the

targets.

|

|

Region-based Approach 3 (AVI, 19.5MB)

The clip shows that the Region-based Approach makes a single

robot tracking more efficient, too. The robot initially tracks

two targets in the current region, but it moves to the next region

when one of the target moves to the next region, and the next

region becomes more crowd than the current one.

|

|

Movements within a Region 1 (AVI, 0.6MB)

The clips shows the movements of mobile sensors within a region.

Each robot keep following the current detected moving targets.

It shows how the green robot follow two targets at the same time

until they split.

|

|

Movements within a Region 2 (AVI, 10.2MB)

The clips shows that a robot always tries to maximize the

number of tracked targets by keeping a proper distance

from the center of the target group.

|

|

Local-following Strategy (AVI, 6.3MB)

The clip shows the worst case of the Local-following Strategy

, which is the case that most robots follows a single robot

who is isolated from other targets.

|

|

Region-based Strategy (AVI, 9.1MB)

The clip shows how the Region-based Strategy works in the

similar situation. Even though more than two robots happen to

track a single target, one of them would move to other region

because the robot density of the current region is way to higher

than the target density.

|

|

|

| Acknowledgements |

|---|

|

This work is supported in part by DARPA grant DABT63-99-1-0015 and

NSF grants ANI-9979457 and ANI-0082498.

|

|

|

|

|

|